How Much Electricity Does A Computer Use?


Since we live in the Information Age, using a computer is almost unavoidable. When our electricity bill climbs, we sometimes wonder how much our computers are costing us. Finding the answer can be somewhat complicated, however, because the amount of electricity a computer uses depends on what hardware you have and what applications you are running.

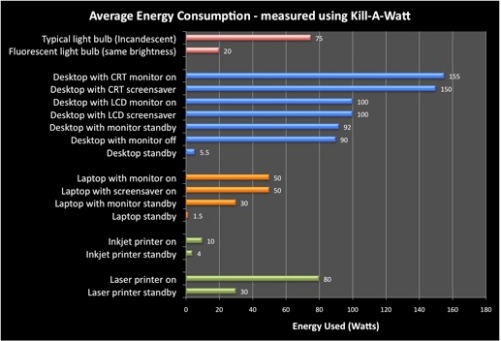

A standard computer might use around 100 to 250 watts, while a high-end computer is likely to consume 250 to 800 watts. A typical CRT monitor (the big, boxy kind) draws about 80 watts, give or take a few, and a typical LCD monitor (the thin flat-panel kind) uses about 35 watts, give or take a few. Before using the formula below, add your monitor's wattage to your computer's.

To calculate your costs, use this formula:

$$
\text{Total Cost} = \frac{\text{Watts} \times \text{Hours Used}}{1000} \times \text{Cost per kilowatt-hour}
$$
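Here is a minimal Python sketch of the cost formula. It adds up the component wattages (computer plus monitor) and divides by 1000 to convert watt-hours to kilowatt-hours, then multiplies by your rate. The function names, the 8-hours-a-day usage and the rate of 0.12 per kWh are hypothetical placeholders; substitute the price from your own electricity bill.

```python
def total_watts(*component_watts: float) -> float:
    """Sum the wattage of every device, e.g. the computer plus its monitor."""
    return sum(component_watts)


def electricity_cost(watts: float, hours_used: float, cost_per_kwh: float) -> float:
    """Total Cost = (Watts x Hours Used) / 1000 x Cost per kilowatt-hour."""
    kilowatt_hours = watts * hours_used / 1000  # convert watt-hours to kWh
    return kilowatt_hours * cost_per_kwh


if __name__ == "__main__":
    # Upper end of a standard computer (250 W) plus a typical LCD monitor (35 W).
    watts = total_watts(250, 35)
    # HYPOTHETICAL usage and rate: 8 hours a day for 30 days at 0.12 per kWh.
    cost = electricity_cost(watts, hours_used=8 * 30, cost_per_kwh=0.12)
    print(f"{watts:.0f} W for 240 hours costs about {cost:.2f}")
```

With these assumed numbers the script prints `285 W for 240 hours costs about 8.21`: 285 W × 240 h is 68.4 kWh, and 68.4 × 0.12 is about 8.21.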

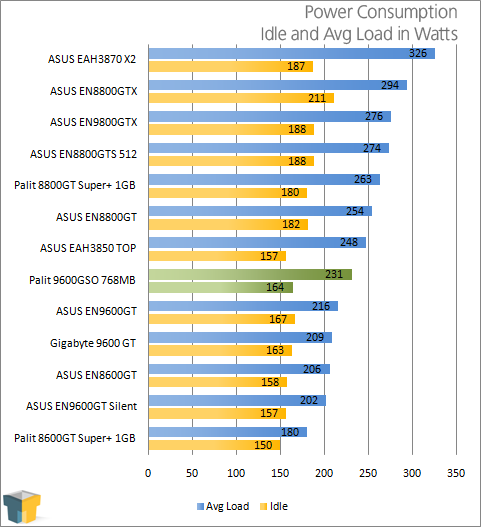

Gaming computers are considered high-end machines because gaming needs a better graphics card, or even two. An SLI or CrossFire computer with two video cards can boost wattage usage considerably.

More hard disks also mean more drives spinning inside your computer, which makes your electricity meter spin faster. If you are running three 160 GB hard drives, consider replacing them with a single 500 GB drive. This cuts electricity use, but keeping everything on one drive also means that if that drive fails, you might lose everything.
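To estimate what consolidating drives might save, the same cost formula can be applied to the difference in drive count. This is only a sketch under stated assumptions: the per-drive wattage (7 W), the usage hours and the 0.12 per kWh rate are hypothetical placeholders, and it assumes the single larger drive draws about the same power as each of the smaller drives it replaces. Check your drives' specification sheets and your bill for real figures.

```python
def drive_savings(
    drives_before: int,
    drives_after: int,
    watts_per_drive: float,
    hours_used: float,
    cost_per_kwh: float,
) -> float:
    """Cost saved by running fewer drives, assuming every drive draws the same power."""
    watts_saved = (drives_before - drives_after) * watts_per_drive
    # Same formula as above: (Watts x Hours Used) / 1000 x Cost per kWh.
    return watts_saved * hours_used / 1000 * cost_per_kwh


if __name__ == "__main__":
    # Three 160 GB drives replaced by one 500 GB drive.
    # HYPOTHETICAL: 7 W per drive, 8 hours a day for a year, 0.12 per kWh.
    saved = drive_savings(3, 1, watts_per_drive=7, hours_used=8 * 365, cost_per_kwh=0.12)
    print(f"Estimated yearly saving: {saved:.2f}")
```

Under these assumptions, two fewer drives save 14 W, or 40.88 kWh over 2,920 hours, which prints `Estimated yearly saving: 4.91`.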

The processor is also a big factor in overall wattage usage. Powerful processors such as the Intel Core i7 and AMD Phenom may consume extra watts. If you are running an Intel Atom, you can forget about your bill: this chip will never make the list of the top ten most power-hungry devices in your house.

If you want an accurate figure, consider purchasing a kilowatt-hour meter or energy meter.
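If your meter shows the kilowatt-hours used over a measured period (many plug-in energy meters do, but check yours), the formula simplifies: the reading already replaces Watts × Hours Used / 1000. The sketch below projects a monthly cost from such a reading. The function name, the 1.5 kWh over 6 hours reading, the 240 hours per month and the 0.12 per kWh rate are all hypothetical examples.

```python
def monthly_cost_from_meter(
    kwh_measured: float,
    hours_measured: float,
    hours_per_month: float,
    cost_per_kwh: float,
) -> float:
    """Project a monthly cost from an energy-meter reading taken over a known period."""
    average_kw = kwh_measured / hours_measured  # average power draw while measured
    return average_kw * hours_per_month * cost_per_kwh


if __name__ == "__main__":
    # HYPOTHETICAL reading: 1.5 kWh after 6 hours of typical use.
    cost = monthly_cost_from_meter(1.5, 6, hours_per_month=240, cost_per_kwh=0.12)
    print(f"Projected monthly cost: {cost:.2f}")
```

Measuring over a longer, representative period gives a better average than a short test, because power draw rises and falls with what you are running.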

